The “Blocked due to access forbidden (403)” error in Google Search Console occurs when Googlebot requests a page or resource and the server responds with a 403 status code, meaning the server understood the request but refuses to serve it. Common causes include firewall or IP restrictions, web server configuration, and access controls in a content management system or plugin. Resolving it involves finding the layer that refuses Googlebot and allowing access to the content you want indexed. Regular monitoring in Google Search Console helps maintain website visibility in search results.

What Is “Blocked due to access forbidden (403)”?

The “Blocked due to access forbidden (403)” error in Google Search Console indicates that Google’s web crawlers, also known as Googlebot, are unable to access certain pages or resources on your website. The error arises when the server receives the request from Googlebot but refuses to authorize it, resulting in a 403 status code.

This error can occur due to various reasons, including:

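- Robots.txt Restrictions: Disallow rules in your robots.txt file do not themselves return a 403; Search Console reports them separately as “Blocked by robots.txt”. They are still worth ruling out first, because both problems keep Googlebot away from the same pages or directories.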

- Server Restrictions: Server-level configurations, such as IP-based restrictions or firewall rules, may be preventing Googlebot from accessing your site.

- .htaccess File Restrictions: If you’re using an Apache web server, directives in the .htaccess file may be blocking Googlebot’s access.

- Content Restrictions: Certain content management systems, membership systems, or plugins may restrict access to certain pages or resources, causing the 403 error.

- URL Parameters: If your website uses URL parameters for dynamic content, a security filter or rewrite rule that matches certain query strings may return a 403 for the parameterized URL even though the base URL loads normally.

Resolving the “Blocked due to access forbidden (403)” error involves identifying and addressing the specific cause. By ensuring that Googlebot has proper access permissions, reviewing server and website configurations, and monitoring Google Search Console for crawl errors, you can rectify the issue and improve your website’s visibility in search results.

How to Fix “Blocked Due to Access Forbidden (403)” Error in Google Search Console

Are you encountering the dreaded “Blocked due to access forbidden (403)” error in Google Search Console? Don’t panic! While it can be frustrating to see this message, it’s usually fixable with some troubleshooting and adjustments. In this guide, we’ll walk you through the steps to resolve this issue and get your website back on track for search engine visibility.

Understanding the Error

First things first, let’s understand what the “Blocked due to access forbidden (403)” error means. This error occurs when Google’s web crawlers are unable to access certain pages or resources on your website. The 403 status code indicates that the server understood the request, but it refuses to authorize it.

Step 1: Verify Googlebot’s Access

The first step is to rule out robots.txt. A robots.txt block is reported in Search Console as “Blocked by robots.txt” rather than as a 403, but it keeps Googlebot away from the same pages, so it is worth checking before you dig into server settings. You can find the file by navigating to www.yourwebsite.com/robots.txt. If you find a group that blocks Googlebot, such as a “User-agent: Googlebot” line followed by “Disallow: /”, modify it to allow access.
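A quick way to test a set of rules is Python's standard `urllib.robotparser` module. The sketch below is only an approximation: Python's parser does not match Google's own robots.txt parser in every detail (wildcard handling, for example), and the two sample files and the helper name `googlebot_allowed` are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt text lets Googlebot fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file's lines, so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Hypothetical robots.txt contents, for illustration only.
BLOCKING = """\
User-agent: Googlebot
Disallow: /
"""

OPEN = """\
User-agent: *
Disallow: /admin/
"""

if __name__ == "__main__":
    for name, text in (("BLOCKING", BLOCKING), ("OPEN", OPEN)):
        allowed = googlebot_allowed(text, "https://www.yourwebsite.com/blog/")
        print(name, "allows Googlebot:", allowed)
```

Paste the contents of your own robots.txt into a string and test the URLs that Search Console flags.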

Step 2: Check for Server Restrictions

Next, investigate whether server-level restrictions are causing the 403 error. Contact your web hosting provider or server administrator to check for IP-based restrictions, firewall rules, or bot-protection services that block Googlebot. Google publishes the IP ranges its crawlers use, so your host can allowlist those ranges or adjust server configurations to allow crawling.
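If you have a blocked IP address from your firewall logs, you can check whether it falls inside a published crawler range. The sketch below assumes the range list is a JSON object with a `prefixes` array whose entries carry an `ipv4Prefix` or `ipv6Prefix` key; confirm that shape against the current file from Google before relying on it. The sample prefixes and function names are illustrative, not an authoritative list.

```python
import ipaddress

# Sample data in the assumed shape of Google's published range file.
# These prefixes are illustrative; load the current file instead of
# hard-coding ranges in real use.
SAMPLE_RANGES = {
    "prefixes": [
        {"ipv4Prefix": "66.249.64.0/27"},
        {"ipv6Prefix": "2001:4860:4801:10::/64"},
    ]
}


def load_networks(ranges: dict) -> list:
    """Convert the prefix entries into ipaddress network objects."""
    networks = []
    for entry in ranges.get("prefixes", []):
        prefix = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if prefix:
            networks.append(ipaddress.ip_network(prefix))
    return networks


def is_listed_crawler_ip(ip: str, networks: list) -> bool:
    """Return True if ip falls inside any of the given networks."""
    address = ipaddress.ip_address(ip)
    # An IPv4 address is never "in" an IPv6 network, and vice versa.
    return any(address in network for network in networks)


if __name__ == "__main__":
    networks = load_networks(SAMPLE_RANGES)
    for ip in ("66.249.64.5", "203.0.113.10"):
        print(ip, "in listed ranges:", is_listed_crawler_ip(ip, networks))
```

An address that your firewall rejected and that falls inside a listed range is a strong hint that the firewall rule, not the website itself, is producing the 403.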

Step 3: Review .htaccess File

If you’re using an Apache web server, the issue might be related to your .htaccess file. Look for directives that could be blocking Googlebot’s access, such as “Deny from” or “Require all denied” rules, IP restrictions, or rewrite rules that return a forbidden response for certain user agents. Make sure there are no unintended restrictions preventing Googlebot from crawling your site.
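On a long .htaccess file, a small script can flag the lines worth reading first. This is a rough sketch: the pattern list covers a few directives that commonly lead to 403 responses and is not exhaustive, and the sample file contents are invented for the example.

```python
import re

# Directives that commonly produce 403 responses. Illustrative only.
SUSPECT_PATTERNS = [
    re.compile(r"^\s*Deny\s+from\b", re.IGNORECASE),
    re.compile(r"^\s*Require\s+all\s+denied\b", re.IGNORECASE),
    re.compile(r"^\s*Require\s+not\b", re.IGNORECASE),
    # RewriteRule lines whose flag list contains F (forbidden).
    re.compile(r"^\s*RewriteRule\b.*\[[^\]]*\bF\b[^\]]*\]", re.IGNORECASE),
    # Conditions that branch on the requesting user agent.
    re.compile(r"^\s*RewriteCond\s+%\{HTTP_USER_AGENT\}", re.IGNORECASE),
]


def find_suspect_directives(htaccess_text: str) -> list:
    """Return (line_number, line) pairs for lines that may cause a 403."""
    hits = []
    for number, line in enumerate(htaccess_text.splitlines(), start=1):
        if line.lstrip().startswith("#"):
            continue  # skip comments
        if any(pattern.search(line) for pattern in SUSPECT_PATTERNS):
            hits.append((number, line.strip()))
    return hits


# Hypothetical .htaccess contents, for illustration only.
SAMPLE = """\
# Block bad bots
RewriteEngine On
RewriteCond %{HTTP_USER_AGENT} (bot|crawler) [NC]
RewriteRule .* - [F,L]
ErrorDocument 404 /404.html
"""

if __name__ == "__main__":
    for number, line in find_suspect_directives(SAMPLE):
        print(f"line {number}: {line}")
```

In the sample, the user-agent condition matches any agent containing “bot”, which includes Googlebot, so the rule that follows it would answer Googlebot with a 403.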

Step 4: Check for Content Restrictions

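Sometimes, the 403 error can occur due to content restrictions set up on your website. Review any access control mechanisms, membership systems, or security plugins that might be restricting access to certain pages or resources. Ensure that Googlebot has access to all content you want indexed. Pages that are meant to stay private can keep returning 403; in that case, consider removing them from your sitemap and internal links so they stop appearing in the report.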
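One way to narrow down a plugin or access-control problem is to request the same URL with a browser user agent and with Googlebot's user agent, then compare the status codes. The sketch below uses only the standard library; the function names are made up here. Treat the result as a hint: the request still comes from your own IP address, so it cannot reproduce IP-based blocking, and some security tools deliberately reject requests that claim to be Googlebot from a non-Google address.

```python
import urllib.error
import urllib.request

GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
BROWSER_UA = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)


def fetch_status(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Request url with the given User-Agent and return the HTTP status."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses raise HTTPError; the code is still useful.
        return error.code


def interpret(browser_status: int, googlebot_status: int) -> str:
    """Turn the two status codes into a rough next step."""
    if googlebot_status != 403:
        return "No 403 for the Googlebot user agent; the block may be IP-based."
    if browser_status == 403:
        return "Both requests get 403; the URL is restricted for everyone."
    return "Only the Googlebot user agent gets 403; look for user-agent rules."


if __name__ == "__main__":
    url = "https://www.yourwebsite.com/"  # placeholder URL
    print(interpret(fetch_status(url, BROWSER_UA), fetch_status(url, GOOGLEBOT_UA)))
```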

Step 5: Verify URL Parameters

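If your website uses URL parameters for dynamic content, ensure that Googlebot can crawl URLs with these parameters. Use the URL Inspection tool in Google Search Console to run a live test on a few parameterized URLs and compare the result with the same page without parameters. Google retired the URL Parameters tool in Search Console in 2022, so if only the parameterized URLs return 403, the fix has to be made in the server, firewall, or application rules that handle those query strings.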

Step 6: Monitor Google Search Console

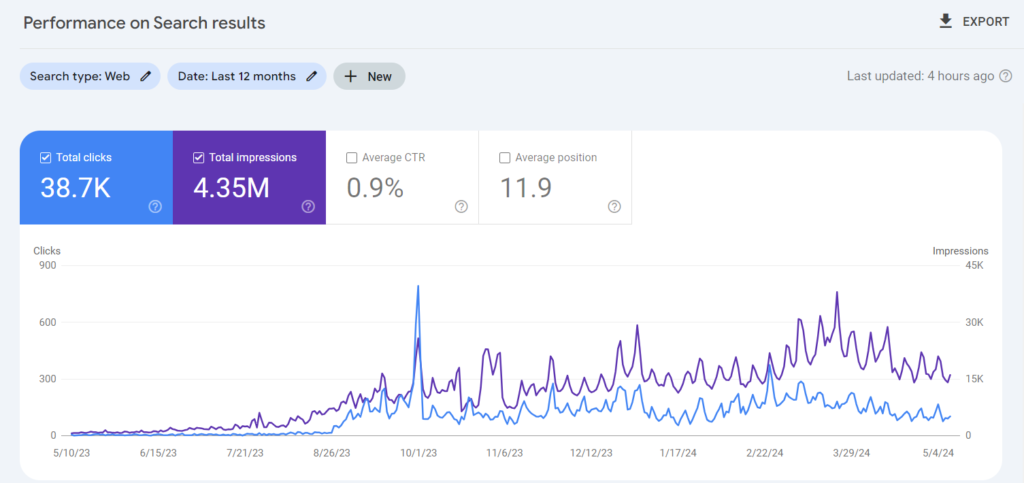

After making any adjustments, monitor Google Search Console for any changes in crawl errors. Use the Page indexing report (formerly called the Coverage report) to check for any remaining “Blocked due to access forbidden (403)” errors, and use Validate Fix there to ask Google to recheck the affected URLs. If you continue to encounter issues, double-check your configurations and seek assistance from web development or SEO experts if necessary.
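Search Console data can lag behind your changes, so your server's access log is often the faster signal. The sketch below counts 403 responses served to requests whose user agent mentions Googlebot. It assumes the common “combined” log format used by default in many Apache and Nginx setups; the sample lines are invented, and your log format may differ.

```python
import re
from collections import Counter

# Matches the widely used "combined" access log format (an assumption).
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_403_paths(log_lines) -> Counter:
    """Count 403 responses per path for requests claiming to be Googlebot."""
    counts = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in an unexpected format
        if match["status"] == "403" and "googlebot" in match["agent"].lower():
            counts[match["path"]] += 1
    return counts


_GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical log lines, for illustration only.
SAMPLE_LOG = [
    f'66.249.64.5 - - [10/Mar/2024:10:00:00 +0000] "GET /members/ HTTP/1.1" 403 199 "-" "{_GOOGLEBOT}"',
    f'66.249.64.5 - - [10/Mar/2024:10:00:05 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{_GOOGLEBOT}"',
    '203.0.113.10 - - [10/Mar/2024:10:00:09 +0000] "GET /members/ HTTP/1.1" 403 199 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
    f'66.249.64.5 - - [10/Mar/2024:10:01:00 +0000] "GET /members/ HTTP/1.1" 403 199 "-" "{_GOOGLEBOT}"',
]

if __name__ == "__main__":
    for path, count in googlebot_403_paths(SAMPLE_LOG).most_common():
        print(f"{count:4d}  {path}")
```

If the counts drop to zero after your fix, Googlebot is getting through again, and the Search Console report should follow once the URLs are recrawled. The user agent can be spoofed, so treat unusually high counts from unfamiliar IP addresses with caution.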

Conclusion

Encountering the “Blocked due to access forbidden (403)” error in Google Search Console can be frustrating, but with the right troubleshooting steps, you can resolve the issue and ensure that Googlebot can crawl and index your website effectively. By verifying access permissions, checking server configurations, and reviewing content restrictions, you can address the underlying causes of the error and improve your website’s visibility in search results. Remember to monitor Google Search Console regularly for any new crawl errors and address them promptly to maintain a healthy and accessible website.

FAQs about the “Blocked due to access forbidden (403)” error in Google Search Console

What are the common causes of this error?

Common causes include restrictions set up at the server level (such as firewall rules or IP blocks), directives in the .htaccess file, content management systems or plugins imposing access restrictions, and rules that reject URLs with certain parameters. Robots.txt blocks are reported under a separate status, “Blocked by robots.txt”.

How can I resolve the "Blocked due to access forbidden (403)" error?

To resolve this error, you'll need to identify and address the specific cause. This may involve adjusting server or firewall configurations, editing .htaccess directives, reviewing content management settings, or ensuring that URLs with parameters are not rejected when Googlebot requests them.

How do I check if Googlebot has access to my website?

You can check your website's robots.txt file to see if there are any rules blocking Googlebot's access. Additionally, you can use the URL Inspection tool in Google Search Console to see how Googlebot renders your pages and if any access issues are detected.

What should I do if I continue to encounter this error after making changes?

If you continue to encounter the error after making adjustments, double-check your configurations and seek assistance from web development or SEO experts if necessary. Regular monitoring of Google Search Console for crawl errors is also recommended to address any ongoing access issues.

Can the "Blocked due to access forbidden (403)" error affect my website's search engine visibility?

Yes, if Googlebot is unable to access important pages or resources on your website due to this error, it can negatively impact your website's visibility in search engine results. Resolving the error and ensuring proper access for Googlebot is essential for maintaining and improving search engine visibility.